People Counting with OpenCV, Python & Ubidots

Digital Image Processing (DIP) is growing fast, thanks in large part to the Machine Learning techniques that developers can now access via the cloud. Processing digital images in the cloud removes the need for dedicated hardware, which makes DIP an increasingly attractive choice. As one of the cheapest and most versatile ways to process images, DIP has found wide-ranging applications. One of the most common is pedestrian detection and counting, a useful metric for airports, train stations, retail stores, stadiums, public events, and museums.

Traditional, off-the-shelf people counters aren’t just expensive; the data they generate is often tied to proprietary systems that limit your options for data extraction and KPI optimization. Conversely, an embedded DIP application using your own camera and SBC (single-board computer) will not only save time and money, but will also give you the freedom to tailor your application to the KPIs you care about and to extract insights from the cloud that wouldn’t otherwise be possible.

Using the cloud to enable your DIP IoT application also extends its overall functionality. With added capabilities such as visualizations, reporting, alerting, and cross-referencing outside data sources (weather, live vendor pricing, or business management systems, for example), the cloud gives developers the freedom they need.

Picture a grocer with an ice cream fridge: they want to track the number of people who pass by and select a product, as well as the number of times the door was opened and the internal temperature of the fridge. From just these few data points, a retailer can run correlation analysis to better understand and optimize their product pricing and the fridge’s overall energy consumption.

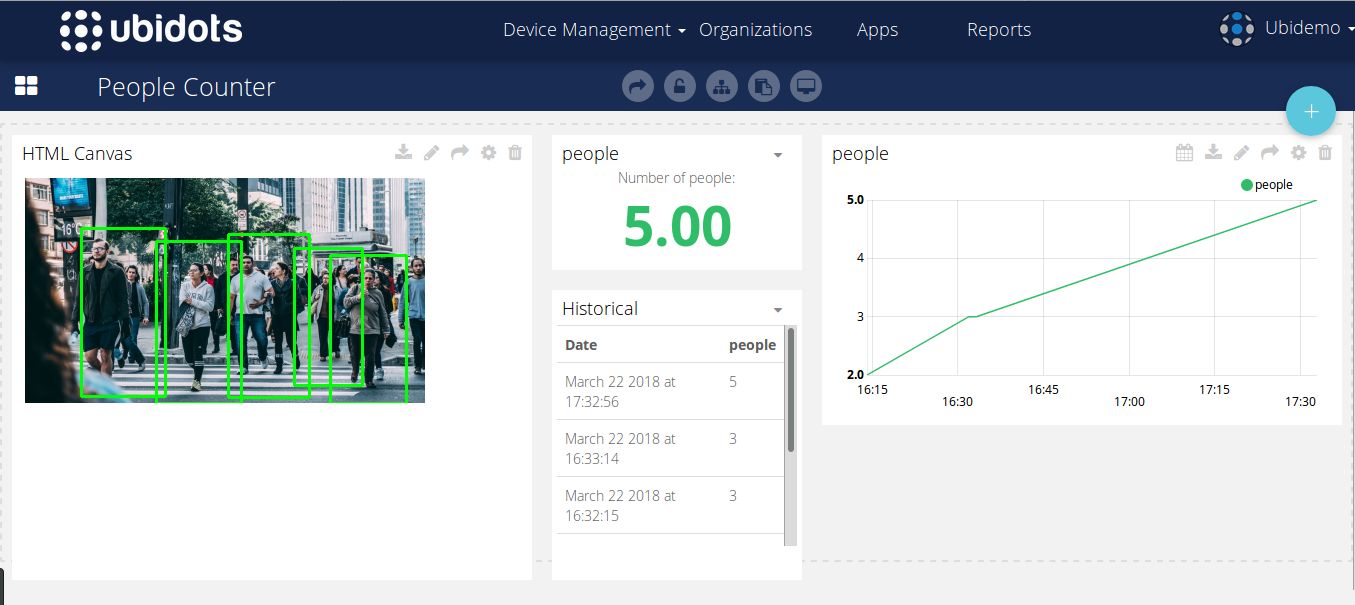

To help you start your digital image processing application, Ubidots has created the following People Counting System tutorial, which uses OpenCV and Python to count the number of people in a given area. You can expand your application beyond people counting with the added resources of the Ubidots IoT Development Platform. Here, you can see a real, live people counting dashboard built with Ubidots.

In this article, we’ll review how to implement a simple DIP overlay to create a People Counter using OpenCV and Ubidots. This example works on any Linux-based distribution, including embedded systems such as the Raspberry Pi, the Orange Pi, or similar boards.

For additional integration inquiries, reach out to Ubidots Support and learn how your business can take advantage of this value-adding technology.

Table of Contents:

- Application Requirements

- Coding – 8 sub-sections

- Testing

- Creating your Dashboard

- Results

1) Application Requirements

- Any (embedded) Linux system running Ubuntu or an Ubuntu derivative

- Python 3 or newer installed in your OS.

- OpenCV 3.0 or greater installed in your OS. If using Ubuntu or its derivatives, follow the official installation tutorial or run the command below:

```bash
pip install opencv-contrib-python
```

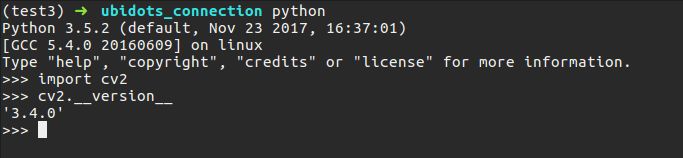

- Once you’ve successfully installed Python 3 and OpenCV, check your configuration by running this small piece of code in the Python interpreter (simply type ‘python’ in your terminal):

```python
import cv2
cv2.__version__
```

The interpreter should print your OpenCV version.

- Install NumPy by following the official instructions or just running the command below:

```bash
pip install numpy
```

- Install imutils

```bash
pip install imutils
```

- Install requests

```bash
pip install requests
```
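You may prefer to check the OpenCV requirement from a script rather than at the interpreter prompt. The sketch below compares a version string against the 3.0 minimum listed above. The helper names `version_tuple()` and `meets_minimum()` are hypothetical and are not part of OpenCV. The sketch relies only on `cv2.__version__` being a dotted string such as "4.5.1".

```python
import re

def version_tuple(version_string):
    """Turns a dotted version string like '4.5.1' into (4, 5, 1)."""
    # Keeps only the numeric parts, so suffixes such as '-dev' are ignored
    return tuple(int(part) for part in re.findall(r"\d+", version_string)[:3])

def meets_minimum(version_string, minimum=(3, 0)):
    """Hypothetical helper: True if the version is at least `minimum`."""
    return version_tuple(version_string) >= minimum

# Replace the literal with cv2.__version__ once OpenCV is installed
print(meets_minimum("4.5.1"))  # → True
```

Comparing tuples rather than raw strings avoids a classic pitfall: as text, "4.10.0" sorts before "4.9.0".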

2) Coding

The whole routine for detection and sending data can be found here. To make the code easier to follow, we will split it into eight sections and explain each one.

Section 1:

```python
from imutils.object_detection import non_max_suppression
import numpy as np
import imutils
import cv2
import requests
import time
import argparse
import base64  # used by convert_to_base64() in Section 4

URL_EDUCATIONAL = "http://things.ubidots.com"
URL_INDUSTRIAL = "http://industrial.api.ubidots.com"
INDUSTRIAL_USER = True  # Set this to False if you are an educational user
TOKEN = "...."  # Put your Ubidots TOKEN here
DEVICE = "detector"  # Device label where the result will be stored
VARIABLE = "people"  # Variable label where the result will be stored

# OpenCV's pre-trained SVM with HOG people features
HOGCV = cv2.HOGDescriptor()
HOGCV.setSVMDetector(cv2.HOGDescriptor_getDefaultPeopleDetector())
```

In Section 1, we import the libraries needed to implement our detector:

- imutils is a useful DIP library that lets us apply different transformations to our results.
- cv2 is the OpenCV Python wrapper.
- requests lets us send our data and results to Ubidots over HTTP.
- base64 encodes the annotated images.
- argparse lets us read the command-line arguments passed to our script.

IMPORTANT: Do not forget to update this code with your Ubidots account TOKEN. If you are an educational user, be sure to set INDUSTRIAL_USER to False.

After the libraries are imported, we initialize our Histogram of Oriented Gradients (HOG) descriptor. HOG is one of the most popular techniques for object detection and has been used successfully in many applications. Fortunately, OpenCV already provides an efficient implementation that combines the HOG algorithm with a support vector machine (SVM), a classic machine learning technique for classification.

The call cv2.HOGDescriptor_getDefaultPeopleDetector() returns the coefficients of OpenCV’s pre-trained SVM for people detection. setSVMDetector() then loads these coefficients into our HOG descriptor, so they can be used to classify the HOG features extracted from each image window.
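To make the descriptor a little more concrete, the arithmetic below computes the length of the HOG feature vector that the default people detector classifies. It assumes the default `cv2.HOGDescriptor()` geometry:

- a 64x128 detection window,
- 16x16 blocks moved in 8-pixel steps,
- 8x8 cells,
- 9 orientation bins.

`hog_descriptor_length()` is a hypothetical helper written for illustration, not an OpenCV function.

```python
# Default geometry of cv2.HOGDescriptor() (assumed here, see the text above)
WIN_SIZE = (64, 128)    # detection window (width, height) in pixels
BLOCK_SIZE = 16         # square block side in pixels
BLOCK_STRIDE = 8        # step between neighbouring blocks
CELL_SIZE = 8           # square cell side in pixels
NBINS = 9               # orientation bins per cell histogram

def hog_descriptor_length(win_size=WIN_SIZE, block=BLOCK_SIZE,
                          stride=BLOCK_STRIDE, cell=CELL_SIZE, nbins=NBINS):
    """Hypothetical helper: number of features HOG extracts from one window."""
    blocks_x = (win_size[0] - block) // stride + 1   # 7 blocks across
    blocks_y = (win_size[1] - block) // stride + 1   # 15 blocks down
    cells_per_block = (block // cell) ** 2           # 2 x 2 = 4 cells
    return blocks_x * blocks_y * cells_per_block * nbins

print(hog_descriptor_length())  # → 3780
```

The linear SVM holds one weight per feature plus a bias term. That is why `len(cv2.HOGDescriptor_getDefaultPeopleDetector())` should report 3781 coefficients.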

Section 2

```python
def detector(image):
    '''
    @image is a numpy array (BGR image as loaded by OpenCV)
    Returns the (xA, yA, xB, yB) boxes of the detected people
    '''
    image = imutils.resize(image, width=min(400, image.shape[1]))
    (rects, weights) = HOGCV.detectMultiScale(image, winStride=(8, 8),
                                              padding=(32, 32), scale=1.05)
    # Applies non-max suppression from the imutils package to discard
    # overlapping boxes
    rects = np.array([[x, y, x + w, y + h] for (x, y, w, h) in rects])
    result = non_max_suppression(rects, probs=None, overlapThresh=0.65)
    return result
```

The detector() function is where the ‘magic’ happens. It receives an image as a NumPy array; OpenCV stores color images as three channels in BGR order. To avoid performance issues, we resize the image to at most 400 pixels wide using imutils and then call the detectMultiScale() method of our HOG object. This method scans the image and uses the classification result from our SVM to decide whether each region contains a person. The parameters of this method are beyond the scope of this tutorial. If you wish to know more, refer to the official OpenCV docs or check out Adrian Rosebrock’s great explanation.
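To give a feel for what `scale=1.05` and `winStride=(8, 8)` mean, the sketch below approximates the search that `detectMultiScale()` performs. The image is repeatedly shrunk by the scale factor, and at each level a 64x128 window slides across it in 8-pixel steps. This is a simplified model written for illustration: it ignores `padding` as well as OpenCV’s internal rounding and level limits. Both helper names are hypothetical.

```python
def pyramid_sizes(width, height, scale=1.05, win=(64, 128)):
    """Approximate image sizes scanned by detectMultiScale()."""
    sizes = []
    factor = 1.0
    while True:
        w, h = int(width / factor), int(height / factor)
        if w < win[0] or h < win[1]:   # stop once the window no longer fits
            break
        sizes.append((w, h))
        factor *= scale
    return sizes

def windows_at(width, height, stride=(8, 8), win=(64, 128)):
    """Number of window positions on one level of the pyramid."""
    return ((width - win[0]) // stride[0] + 1) * ((height - win[1]) // stride[1] + 1)

levels = pyramid_sizes(400, 300)
total = sum(windows_at(w, h) for (w, h) in levels)
print(len(levels), total)
```

A `scale` closer to 1.0 adds pyramid levels and therefore more windows to classify. This is also why resizing the input to 400 pixels wide keeps the detector fast.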

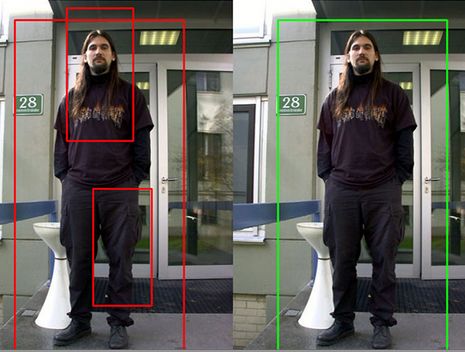

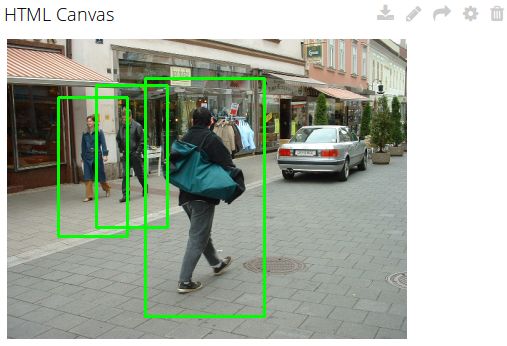

The HOG analysis generates bounding boxes around the detected objects. Sometimes several boxes overlap on the same person, causing false positives or double counts. To fix this, we use the non-maxima suppression utility from the imutils library to remove the overlapping boxes, as illustrated in the images above.

Pictures reproduced from https://www.pyimagesearch.com
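For readers who want to see what the suppression step does, here is a minimal pure-Python sketch of greedy non-maximum suppression without detection scores. Boxes are processed starting with the one whose bottom edge is lowest in the image (largest y2). Any remaining box that overlaps a kept box by more than `overlap_thresh` of its own area is discarded. This illustrates the approach described in the PyImageSearch posts referenced above; it is not the imutils source, and `suppress_overlaps()` is a hypothetical name.

```python
def suppress_overlaps(boxes, overlap_thresh=0.65):
    """Greedy non-maximum suppression for (x1, y1, x2, y2) boxes without scores."""
    if not boxes:
        return []
    # Pixel areas, using the inclusive-coordinate convention (+1)
    areas = [(x2 - x1 + 1) * (y2 - y1 + 1) for (x1, y1, x2, y2) in boxes]
    # Without detection scores, order the boxes by their bottom edge (y2)
    order = sorted(range(len(boxes)), key=lambda i: boxes[i][3])
    keep = []
    while order:
        i = order.pop()          # box with the largest y2 still unprocessed
        keep.append(i)
        survivors = []
        for j in order:
            # Intersection rectangle between box i and box j
            xx1 = max(boxes[i][0], boxes[j][0])
            yy1 = max(boxes[i][1], boxes[j][1])
            xx2 = min(boxes[i][2], boxes[j][2])
            yy2 = min(boxes[i][3], boxes[j][3])
            w = max(0, xx2 - xx1 + 1)
            h = max(0, yy2 - yy1 + 1)
            # Overlap is measured relative to box j's own area
            if (w * h) / areas[j] <= overlap_thresh:
                survivors.append(j)
        order = survivors
    return [boxes[i] for i in keep]

detections = [(10, 10, 60, 110), (12, 12, 62, 112), (200, 50, 250, 150)]
print(suppress_overlaps(detections))  # → [(200, 50, 250, 150), (12, 12, 62, 112)]
```

The two nearly identical boxes around the same person collapse into one, while the distant box survives. Without suppression, this would be counted as three people instead of two.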

Section 3:

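```python
def localDetect(image_path):
    result = []
    image = cv2.imread(image_path)
    # cv2.imread() returns None (it does not raise) if the file can't be read
    if image is None:
        print("[ERROR] could not read your local image")
        return (result, None)
    # Resizes like detector() does, so the returned boxes match this image
    image = imutils.resize(image, width=min(400, image.shape[1]))
    print("[INFO] Detecting people")
    result = detector(image)
    # Shows the result
    for (xA, yA, xB, yB) in result:
        cv2.rectangle(image, (xA, yA), (xB, yB), (0, 255, 0), 2)
    cv2.imshow("result", image)
    cv2.waitKey(0)
    cv2.destroyAllWindows()
    return (result, image)
```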

In this part of our code, we define a function that reads an image from a local file and detects any people in it. It simply calls the detector() function and loops over the results to draw a bounding box around each detection. The annotated image is then displayed in a new window until you press any key. The function returns the list of detected boxes together with the annotated image.
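Because `detector()` works on a copy resized to at most 400 pixels wide, the boxes it returns are in that resized image’s coordinates. If you would rather draw them on the full-resolution image, the sketch below shows the conversion. `scale_boxes()` is a hypothetical helper. It assumes the resize preserved the aspect ratio, as `imutils.resize()` does when only `width` is given, so the result is accurate to within a pixel of rounding.

```python
def scale_boxes(boxes, resized_width, original_width):
    """Maps (xA, yA, xB, yB) boxes from the resized image back to the original."""
    ratio = original_width / resized_width  # approximately the same for x and y
    return [tuple(int(round(c * ratio)) for c in box) for box in boxes]

print(scale_boxes([(10, 20, 50, 100)], resized_width=400, original_width=800))
# → [(20, 40, 100, 200)]
```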

Section 4:

```python
def cameraDetect(token, device, variable, sample_time=5):
    cap = cv2.VideoCapture(0)
    init = time.time()
    # Allowed sample time for Ubidots is 1 dot/second
    if sample_time < 1:
        sample_time = 1
    while True:
        # Capture frame-by-frame
        ret, frame = cap.read()
        if not ret:  # no frame available (camera missing or disconnected)
            print("[ERROR] could not read a frame from the camera")
            break
        frame = imutils.resize(frame, width=min(400, frame.shape[1]))
        result = detector(frame.copy())
        # Shows the result
        for (xA, yA, xB, yB) in result:
            cv2.rectangle(frame, (xA, yA), (xB, yB), (0, 255, 0), 2)
        cv2.imshow('frame', frame)
        # Sends results every sample_time seconds
        if time.time() - init >= sample_time:
            print("[INFO] Sending current frame results")
            # Converts the image to base 64 and adds it to the context
            b64 = convert_to_base64(frame)
            context = {"image": b64}
            sendToUbidots(token, device, variable,
                          len(result), context=context)
            init = time.time()
        # Press 'q' in the video window to stop
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break
    # When everything is done, release the capture
    cap.release()
    cv2.destroyAllWindows()


def convert_to_base64(image):
    image = imutils.resize(image, width=400)
    # imencode() returns (success, buffer); tobytes() replaces the deprecated tostring()
    img_str = cv2.imencode('.png', image)[1].tobytes()
    b64 = base64.b64encode(img_str)
    return b64.decode('utf-8')
```

Like the function in Section 3, this Section 4 function calls detector() and paints the boxes. Here, however, the images are retrieved directly from the webcam using OpenCV’s VideoCapture() method. We have slightly modified the official OpenCV camera-capture example to grab frames from the camera and send the results to your Ubidots account every “n” seconds. The sendToUbidots() function is reviewed later in this tutorial. The convert_to_base64() function converts your image to a base64 string. This string is what lets us display the results in Ubidots using JavaScript code inside an HTML Canvas widget.
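The “send every n seconds” logic in cameraDetect() can be pulled out into a small, testable throttle. In this sketch, `SampleThrottle` is a hypothetical class, not part of the tutorial’s code. The clock is injectable so the behaviour can be checked without waiting in real time.

```python
import time

class SampleThrottle:
    """Hypothetical helper: allows one upload every `sample_time` seconds."""

    def __init__(self, sample_time=5, clock=time.monotonic):
        self.sample_time = max(1, sample_time)  # same 1-second floor as cameraDetect()
        self.clock = clock
        self.last = clock()

    def ready(self):
        """Returns True (and restarts the timer) once enough time has passed."""
        now = self.clock()
        if now - self.last >= self.sample_time:
            self.last = now
            return True
        return False

# Simulated clock readings (seconds) instead of waiting in real time
readings = iter([0.0, 1.0, 5.0, 6.0, 10.5])
throttle = SampleThrottle(5, clock=lambda: next(readings))
print([throttle.ready() for _ in range(4)])  # → [False, True, False, True]
```

The default clock is `time.monotonic()` rather than `time.time()` because a monotonic clock is not affected by system clock adjustments. Such an adjustment (an NTP sync on a Raspberry Pi, for example) could otherwise delay or bunch up uploads.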

Section 5:

```python
def detectPeople(args):
    image_path = args["image"]
    camera = str(args["camera"]).lower() == 'true'
    # Routine to read local image
    if image_path is not None and not camera:
        print("[INFO] Image path provided, attempting to read image")
        (result, image) = localDetect(image_path)
        if image is None:  # the image could not be read
            return None
        print("[INFO] sending results")
        # Converts the image to base 64 and adds it to the context
        b64 = convert_to_base64(image)
        context = {"image": b64}
        # Sends the result
        req = sendToUbidots(TOKEN, DEVICE, VARIABLE,
                            len(result), context=context)
        if req.status_code >= 400:
            print("[ERROR] Could not send data to Ubidots")
        return req
    # Routine to read images from webcam
    if camera:
        print("[INFO] reading camera images")
        cameraDetect(TOKEN, DEVICE, VARIABLE)
```

This function takes the arguments entered in your terminal and triggers the matching routine. That routine searches for people either in a locally stored image file or in the frames from your webcam.
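The decision logic of detectPeople() can be separated from OpenCV and Ubidots, which makes it easy to test on its own. The sketch below is a hypothetical refactoring; `choose_routine()` is not part of the tutorial’s code. It treats the camera flag case-insensitively and, like detectPeople(), gives the camera priority over an image path.

```python
def choose_routine(args):
    """Hypothetical helper mirroring detectPeople(): 'camera', 'image' or None."""
    camera = str(args.get("camera")).strip().lower() == "true"
    if camera:
        return "camera"          # the camera flag wins, as in detectPeople()
    if args.get("image") is not None:
        return "image"
    return None                  # nothing to do: no image path and no camera

print(choose_routine({"image": "dataset/image_1.png", "camera": False}))  # → image
print(choose_routine({"image": None, "camera": "True"}))                  # → camera
```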

Section 6:

```python
def buildPayload(variable, value, context):
    return {variable: {"value": value, "context": context}}


def sendToUbidots(token, device, variable, value, context=None,
                  industrial=INDUSTRIAL_USER):
    # Builds the endpoint (the default honours INDUSTRIAL_USER from Section 1)
    url = URL_INDUSTRIAL if industrial else URL_EDUCATIONAL
    url = "{}/api/v1.6/devices/{}".format(url, device)
    # A fresh dict per call avoids the shared mutable default argument pitfall
    payload = buildPayload(variable, value, context or {})
    headers = {"X-Auth-Token": token, "Content-Type": "application/json"}
    attempts = 0
    status = 400
    # Tries up to six times while Ubidots answers with an HTTP error
    while status >= 400 and attempts <= 5:
        req = requests.post(url=url, headers=headers, json=payload)
        status = req.status_code
        attempts += 1
        if status >= 400:
            time.sleep(1)  # waits before the next attempt
    return req
```

The two functions in Section 6 carry your results to Ubidots, where you can analyze and visualize them. The first function, buildPayload(), builds the JSON payload for the request. The second, sendToUbidots(), receives your Ubidots parameters (the TOKEN and the device and variable labels) and stores the result, which in this case is the number of bounding boxes detected by OpenCV. If Ubidots answers with an HTTP error, sendToUbidots() retries the request up to five more times. Optionally, a context can also be sent; here it stores the annotated image as a base64 string so it can be retrieved later.
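To see exactly what is sent to Ubidots, the sketch below builds the same payload structure as buildPayload() for a detection of 3 people and serializes it to JSON. The PNG bytes are a stand-in (just the 8-byte PNG file signature) rather than a real frame from cv2.imencode(). `build_payload()` is a hypothetical stand-alone copy of the tutorial’s function.

```python
import base64
import json

def build_payload(variable, value, context=None):
    """Same shape as buildPayload(): {variable: {"value": ..., "context": ...}}."""
    return {variable: {"value": value, "context": context or {}}}

# Stand-in bytes: the PNG signature instead of a real encoded frame
fake_png = b"\x89PNG\r\n\x1a\n"
context = {"image": base64.b64encode(fake_png).decode("utf-8")}
payload = build_payload("people", 3, context)
print(json.dumps(payload))
# → {"people": {"value": 3, "context": {"image": "iVBORw0KGgo="}}}
```

Every PNG encoded this way starts with "iVBORw0KGgo". This prefix is a quick way to confirm that a stored context really holds a PNG image.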

Section 7:

```python
def argsParser():
    ap = argparse.ArgumentParser()
    ap.add_argument("-i", "--image", default=None,
                    help="path to the test image file")
    ap.add_argument("-c", "--camera", default=False,
                    help="Set as true if you wish to use the camera")
    args = vars(ap.parse_args())
    return args
```

In Section 7, we reach the end of our code analysis. The argsParser() function simply parses the arguments passed to the script from your terminal and returns them as a dictionary. The parser accepts two arguments:

- image: The path to the image file inside your system

- camera: A variable that if set to ‘true’ will call the cameraDetect() method.
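Passing an explicit list to `parse_args()` lets you see the dictionary that argsParser() hands to detectPeople() without going through the terminal. The `argv` parameter below is a hypothetical addition for testing. Called with no argument, the function behaves like the original and reads `sys.argv`.

```python
import argparse

def args_parser(argv=None):
    """Same arguments as argsParser(), but accepts an explicit argument list."""
    ap = argparse.ArgumentParser()
    ap.add_argument("-i", "--image", default=None,
                    help="path to the test image file")
    ap.add_argument("-c", "--camera", default=False,
                    help="set as true if you wish to use the camera")
    # parse_args(None) falls back to sys.argv, exactly like the original
    return vars(ap.parse_args(argv))

print(args_parser(["-i", "dataset/image_1.png"]))
# → {'image': 'dataset/image_1.png', 'camera': False}
print(args_parser(["-c", "true"]))
# → {'image': None, 'camera': 'true'}
```

Note that `camera` arrives as the string 'true', not as a boolean. This is why detectPeople() compares it as text.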

Section 8:

```python
def main():
    args = argsParser()
    detectPeople(args)


if __name__ == '__main__':
    main()
```

Section 8, the final piece of our code, is the main() function. It parses the arguments from the console and launches the selected routine.

Don’t forget, the entire code can be pulled from GitHub here.

3) Testing

Open your favorite text editor (Sublime Text, Notepad, nano, etc.) and copy and paste the full code available here. Update the code with your specific Ubidots TOKEN and save your file as “peopleCounter.py”.

With your code properly saved, let’s test the following four images, selected at random from the Caltech Dataset and the Pexels public dataset:

To analyze these images, first store them on your laptop or PC and note the path to each file. Then pass that path to the script with the -i flag:

```bash
python peopleCounter.py -i PATH_TO_IMAGE_FILE
```

In my case, I stored the images in a folder named ‘dataset’. To run a valid command, use the command below with your own image path:

```bash
python peopleCounter.py -i dataset/image_1.png
```

If you wish to take images from your camera instead of a local file, simply run the command below:

```bash
python peopleCounter.py -c true
```

Test results:

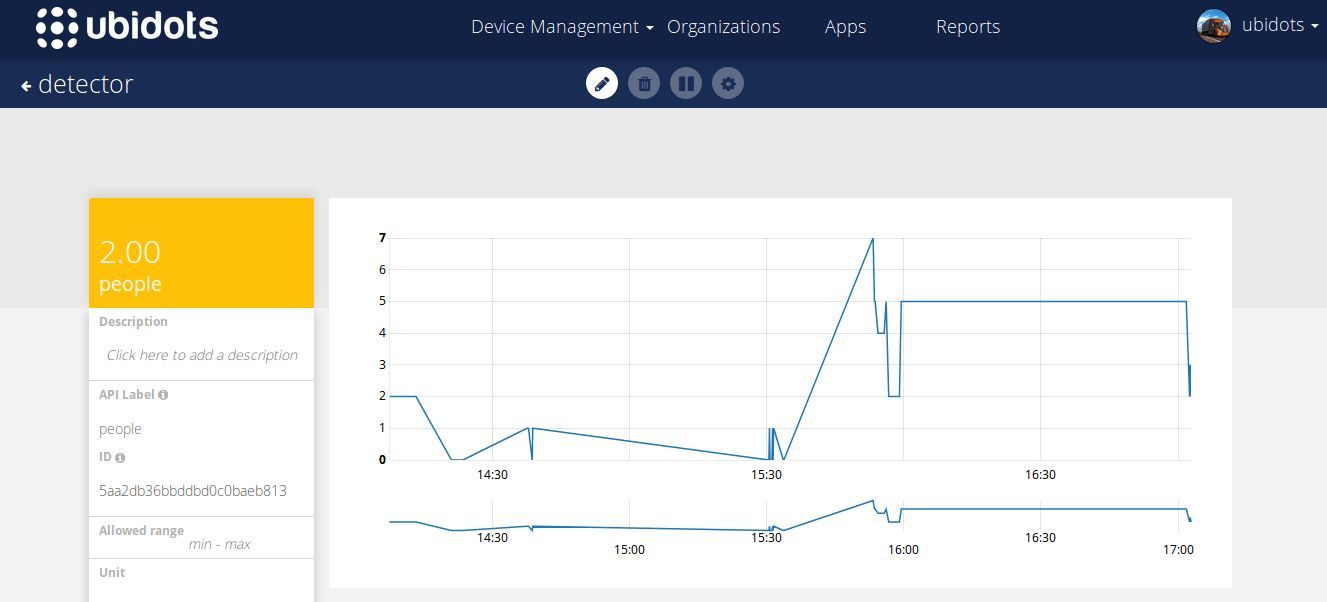

In addition to these testing checks, you will also see, in real-time, the results of these tests stored in your Ubidots account:

4) Creating your Dashboard

We will use an HTML Canvas widget to watch our results in real time. This tutorial does not cover HTML Canvas widgets themselves, so if you do not know how to use them, please refer to the articles below:

We will use the basic real-time example with minor modifications to display our images. Below you can see the widget’s code snippet.

HTML

```html
<img id="img" width="400px" height="auto"/>
```

JS

```javascript
var socket;
var srv = "industrial.ubidots.com:443";
// var srv = "app.ubidots.com:443"; // Uncomment this line if you are an educational user
var VAR_ID = "5ab402dabbddbd3476d85967"; // Put your variable ID here
var TOKEN = ""; // Put your token here

$(document).ready(function () {

  function renderImage(imageBase64) {
    if (!imageBase64) return;
    $('#img').attr('src', 'data:image/png;base64,' + imageBase64);
  }

  // Retrieves the last value of the variable; it runs only once
  function getDataFromVariable(variable, token, callback) {
    var url = 'https://things.ubidots.com/api/v1.6/variables/' + variable + '/values';
    var headers = {
      'X-Auth-Token': token,
      'Content-Type': 'application/json'
    };
    $.ajax({
      url: url,
      method: 'GET',
      headers: headers,
      data: {
        page_size: 1
      },
      success: function (res) {
        if (res.results.length > 0) {
          renderImage(res.results[0].context.image);
        }
        callback();
      }
    });
  }

  // Implements the connection to the server
  socket = io.connect("https://" + srv, {path: '/notifications'});
  var subscribedVars = [];

  // Publishes the variable ID to listen to and registers the callback
  var subscribeVariable = function (variable, callback) {
    socket.emit('rt/variables/id/last_value', {
      variable: variable
    });
    // Listens for changes
    socket.on('rt/variables/' + variable + '/last_value', callback);
    subscribedVars.push(variable);
  };

  // Stops listening to a variable
  var unSubscribeVariable = function (variable) {
    socket.emit('unsub/rt/variables/id/last_value', {
      variable: variable
    });
    var pst = subscribedVars.indexOf(variable);
    if (pst !== -1) {
      subscribedVars.splice(pst, 1);
    }
  };

  // Registers the socket handlers; call it only once to avoid duplicate handlers
  var connectSocket = function () {
    socket.on('connect', function () {
      console.log('connect');
      socket.emit('authentication', {token: TOKEN});
    });
    window.addEventListener('online', function () {
      console.log('online');
      socket.emit('authentication', {token: TOKEN});
    });
    socket.on('authenticated', function () {
      console.log('authenticated');
      subscribedVars.forEach(function (variable_id) {
        socket.emit('rt/variables/id/last_value', { variable: variable_id });
      });
    });
  };

  /* Main Routine */
  // Registers the handlers right after io.connect() so 'connect' is not missed
  connectSocket();
  // Shows the last stored image while waiting for real-time updates
  getDataFromVariable(VAR_ID, TOKEN, function () {});

  // Subscribe Variable with your own code.
  subscribeVariable(VAR_ID, function (value) {
    var parsedValue = JSON.parse(value);
    console.log(parsedValue);
    renderImage(parsedValue.context.image);
  });
});
```

Do not forget to put your account TOKEN and the variable ID at the beginning of the code snippet.
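You may want to inspect the stored results outside the dashboard. The request that the widget’s getDataFromVariable() makes can be reproduced in Python with only the standard library. The endpoint, the `page_size` parameter, and the `results[0].context.image` response shape are all taken from the widget code above. Adjust `BASE_URL` if your account uses a different host. The function names are hypothetical.

```python
import json
import urllib.parse
import urllib.request

# Host taken from the widget's getDataFromVariable(); adjust it for your account
BASE_URL = "https://things.ubidots.com"

def fetch_last_values(var_id, token, base_url=BASE_URL):
    """Hypothetical helper: GETs the most recent value of a variable."""
    query = urllib.parse.urlencode({"page_size": 1})
    url = "{}/api/v1.6/variables/{}/values?{}".format(base_url, var_id, query)
    request = urllib.request.Request(url, headers={"X-Auth-Token": token})
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)

def last_image(response_json):
    """Extracts results[0].context.image, or None if it is missing."""
    results = response_json.get("results", [])
    if not results:
        return None
    return (results[0].get("context") or {}).get("image")

def to_data_uri(image_b64):
    """Builds the same kind of data URI the widget assigns to the <img> src."""
    return "data:image/png;base64," + image_b64
```

For example, `to_data_uri(last_image(fetch_last_values(VAR_ID, TOKEN)))` returns a string that a browser can display directly. This works provided the request succeeds and the last value carries an image.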

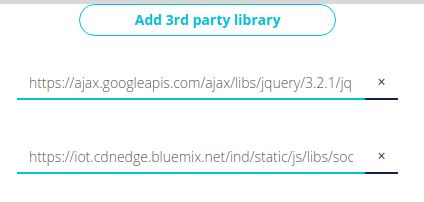

THIRD PARTY LIBRARIES

Add the following third-party libraries:

- https://ajax.googleapis.com/ajax/libs/jquery/3.2.1/jquery.min.js

- https://iot.cdnedge.bluemix.net/ind/static/js/libs/socket.io/socket.io.min.js

Once you save your widget, you should get something like the one below:

5) Results

You can watch the dashboards with the results in this link.

In this article, we have explored how to create an IoT People Counter using DIP (image processing), OpenCV, and Ubidots. PIR and other simple optical sensors only register motion or a broken beam. A DIP application, by contrast, can tell people apart from other objects, which can make it considerably more accurate and gives you an efficient, flexible people counter.

Let us know what you think by leaving Ubidots a comment in our community forums, or connect with Ubidots on Facebook, Twitter, or Hackster.

Happy Hacking!